KYPO Cyber Range Platform (KYPO CRP) has been developed by Masaryk University since 2013. KYPO CRP is built entirely on state-of-the-art approaches such as containers, infrastructure as code, microservices, and open-source software, including the OpenStack cloud platform.

KYPO CRP is now part of the CONCORDIA consortium. CONCORDIA H2020 is a dedicated consortium of over 52 partners from academia, industry, and public bodies. The main objective of the project is to integrate Europe’s cybersecurity competencies into a network of expertise, in order to build a secure, resilient, and trusted European ecosystem for the digital sovereignty of Europe.

The CONCORDIA project released KYPO CRP as open source in 2020. Releasing an open-source cyber range is part of CONCORDIA's strategy for building that trusted, secure, and resilient ecosystem.

KYPO Cyber Range Platform is the European Commission’s Innovation Radar Prize Winner in the ‘Disruptive Tech’ category.

In this article, I describe how to install KYPO Cyber Range Platform (CRP) on OpenStack, running on an Ubuntu Server instance in the AWS cloud. I installed OpenStack on Ubuntu with DevStack.

What is a Cyber Range?

A Cyber Range is a platform for cyber security research and education: a simulated representation of an organization’s network, systems, tools, and applications connected in an isolated environment.

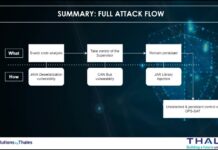

A Cyber Range (a kind of modeled network, or a digital twin of a real network) allows adversary emulation, a type of ethical hacking engagement in which the Red Team emulates how an adversary operates, using the same tactics, techniques, and procedures (TTPs) against a target organization.

The goal of these engagements is to improve education and technology, and to support cyber security research.

Adversary emulations are performed using a structured approach following industry methodologies and frameworks (such as MITRE ATT&CK) and leverage Cyber Threat Intelligence to emulate a malicious actor that has the opportunity, intent, and capability to attack the target organization.

What is DevStack?

DevStack is a modular set of scripts that can be run to deploy a basic OpenStack cloud for use as a demo or test environment. The scripts can be run on a single node that is baremetal or a virtual machine. It can also be configured to deploy to multiple nodes. DevStack deployment takes care of tedious tasks like configuring the database and message queueing system, making it possible for developers to quickly and easily deploy an OpenStack cloud.

By default, the core services for OpenStack are installed, but users can configure additional services to be deployed. All services are installed from source. DevStack will pull the services from git master unless configured to clone from a stable branch (e.g. stable/pike).

By default, DevStack installs keystone, glance, nova, placement, cinder, neutron, and horizon. It does not install heat, the OpenStack orchestration service, which KYPO CRP requires, so you have to configure DevStack to enable heat.

Ubuntu Server Installation on AWS Cloud

The instance runs Ubuntu Server 20.04.3 LTS (HVM) with 4 vCPUs, 16 GB of RAM, and a 55 GB SSD disk.

root# lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description: Ubuntu 20.04.3 LTS
Release: 20.04
Codename: focal

DevStack installation

I followed this official tutorial but also this article. So let’s go step by step.

ubuntu$ sudo apt update
Fetched 20.6 MB in 4s (5862 kB/s)
Reading package lists... Done
Building dependency tree
Reading state information... Done
31 packages can be upgraded. Run 'apt list --upgradable' to see them.

ubuntu$ sudo apt -y upgrade
Found linux image: /boot/vmlinuz-5.11.0-1021-aws
Found initrd image: /boot/microcode.cpio /boot/initrd.img-5.11.0-1021-aws
Found linux image: /boot/vmlinuz-5.11.0-1020-aws
Found initrd image: /boot/microcode.cpio /boot/initrd.img-5.11.0-1020-aws
Found Ubuntu 20.04.3 LTS (20.04) on /dev/xvda1
Done

ubuntu$ sudo apt -y dist-upgrade
Reading package lists... Done
Building dependency tree
Reading state information... Done
Calculating upgrade... Done
0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

ubuntu$ sudo reboot

ubuntu$ sudo useradd -s /bin/bash -d /opt/stack -m stack

ubuntu$ echo "stack ALL=(ALL) NOPASSWD: ALL" | sudo tee /etc/sudoers.d/stack

ubuntu$ sudo su - stack

stack$ sudo su -

root$ su - stack

stack$ sudo apt -y install git
Reading package lists... Done
Building dependency tree
Reading state information... Done
git is already the newest version (1:2.25.1-1ubuntu3.2).
git set to manually installed.
0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

stack$ git clone https://git.openstack.org/openstack-dev/devstack
Cloning into 'devstack'...
warning: redirecting to https://opendev.org/openstack/devstack/
remote: Enumerating objects: 27621, done.
remote: Counting objects: 100% (27621/27621), done.
remote: Compressing objects: 100% (9258/9258), done.
remote: Total 47887 (delta 26959), reused 18363 (delta 18363), pack-reused 20266
Receiving objects: 100% (47887/47887), 10.19 MiB | 4.03 MiB/s, done.
Resolving deltas: 100% (33650/33650), done.

stack$ cd devstack

stack$ vi local.conf

Add:

[[local|localrc]]
# Password for KeyStone, Database, RabbitMQ and Service
ADMIN_PASSWORD=StrongAdminSecret
DATABASE_PASSWORD=$ADMIN_PASSWORD
RABBIT_PASSWORD=$ADMIN_PASSWORD
SERVICE_PASSWORD=$ADMIN_PASSWORD

Heat is configured by default on devstack for Icehouse and Juno releases. But as mentioned at the beginning, newer versions of OpenStack require enabling heat services in devstack local.conf. I followed this tutorial.

Add the following to [[local|localrc]] section of local.conf:

[[local|localrc]]
#Enable heat services
enable_service h-eng h-api h-api-cfn h-api-cw

Since Newton release, heat is available as a devstack plugin. To enable the plugin add the following to the [[local|localrc]] section of local.conf:

[[local|localrc]]
#Enable heat plugin
enable_plugin heat https://opendev.org/openstack/heat

I tried to use a stable branch by passing the branch name to enable_plugin, but it did not work for me, so I did not add the following line.

enable_plugin heat https://opendev.org/openstack/heat stable/newton

It would also be useful to automatically download and register a VM image that heat can launch. To do that add the following to [[local|localrc]] section of local.conf:

IMAGE_URL_SITE="https://download.fedoraproject.org"
IMAGE_URL_PATH="/pub/fedora/linux/releases/33/Cloud/x86_64/images/"
IMAGE_URL_FILE="Fedora-Cloud-Base-33-1.2.x86_64.qcow2"
IMAGE_URLS+=","$IMAGE_URL_SITE$IMAGE_URL_PATH$IMAGE_URL_FILE
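The fragments above can be written to local.conf in one step. The following is only a sketch: it includes every fragment listed in this article, and DEVSTACK_DIR is a hypothetical variable that defaults to a scratch directory, so nothing is overwritten unless you point it at your real devstack checkout.

```shell
# Sketch: assemble the local.conf fragments from this article into one file.
# DEVSTACK_DIR is a hypothetical variable; it defaults to a scratch directory
# so the sketch can be tried without touching a real DevStack checkout.
set -eu

DEVSTACK_DIR="${DEVSTACK_DIR:-$(mktemp -d)}"
LOCAL_CONF="$DEVSTACK_DIR/local.conf"

# The quoted 'EOF' keeps $ADMIN_PASSWORD and the IMAGE_URL_* variables literal,
# so DevStack expands them itself when it reads the file.
cat > "$LOCAL_CONF" <<'EOF'
[[local|localrc]]
# Password for KeyStone, Database, RabbitMQ and Service
ADMIN_PASSWORD=StrongAdminSecret
DATABASE_PASSWORD=$ADMIN_PASSWORD
RABBIT_PASSWORD=$ADMIN_PASSWORD
SERVICE_PASSWORD=$ADMIN_PASSWORD

#Enable heat services
enable_service h-eng h-api h-api-cfn h-api-cw

#Enable heat plugin
enable_plugin heat https://opendev.org/openstack/heat

# Download and register a VM image that heat can launch
IMAGE_URL_SITE="https://download.fedoraproject.org"
IMAGE_URL_PATH="/pub/fedora/linux/releases/33/Cloud/x86_64/images/"
IMAGE_URL_FILE="Fedora-Cloud-Base-33-1.2.x86_64.qcow2"
IMAGE_URLS+=","$IMAGE_URL_SITE$IMAGE_URL_PATH$IMAGE_URL_FILE
EOF

echo "Wrote $LOCAL_CONF"
```

To write straight into the checkout, run it with DEVSTACK_DIR=/opt/stack/devstack, and replace StrongAdminSecret with your own password first.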

Disable the Ubuntu Firewall

stack$ sudo ufw disable

I then started the OpenStack installation.

stack$ ./stack.sh

This takes about 15 to 20 minutes, depending largely on the speed of the internet connection (about 23 minutes in my case). At the end of the installation process, you should see output like this:

=========================
DevStack Component Timing
 (times are in seconds)
=========================
wait_for_service      16
pip_install          232
apt-get              264
run_process           27
dbsync                15
git_timed            286
apt-get-update         1
test_with_retry        5
async_wait            72
osc                  305
-------------------------
Unaccounted time     155
=========================
Total runtime       1378

=================
 Async summary
=================
 Time spent in the background minus waits: 367 sec
 Elapsed time: 1378 sec
 Time if we did everything serially: 1745 sec
 Speedup: 1.26633

This is your host IP address: xxx.xxx.xxx.xxx
This is your host IPv6 address: ::1
Horizon is now available at http://xxx.xxx.xxx.xxx/dashboard
Keystone is serving at http://xxx.xxx.xxx.xxx/identity/
The default users are: admin and demo
The password: xxxxxxx

Services are running under systemd unit files.
For more information see:
https://docs.openstack.org/devstack/latest/systemd.html

DevStack Version: yoga
Change: f9a896c6e6afcf52e9a50613285940c26e353ba3 Rehome functions to enable Neutron's QoS service 2021-11-13 19:52:06 +0000
OS Version: Ubuntu 20.04 focal

2021-11-15 20:47:52.095 | stack.sh completed in 1378 seconds.

Copy the Horizon URL shown in the installation output and paste it into your web browser:

http://192.168.10.100/dashboard

Log in as the default user admin with the password you configured.

I now have access to the Horizon dashboard to manage VMs, networks, volumes, and images.

Before you can run client commands, you must download the OpenStack RC file from the Horizon dashboard and source it in the current shell environment.

To download the OpenStack RC file, log in to the Horizon dashboard, check that you are in the right project (admin in my case), and go to Project > API Access.

In the API Access section, use the “Download OpenStack RC File” link to save the admin-openrc.sh file on your desktop.

Copy the contents of the file to the server.

stack$ vi admin-openrc.sh

Source the file. As a security mechanism, the file does not contain the user password; you are asked to enter it when you source the file.

stack$ source admin-openrc.sh
Please enter your OpenStack Password for project admin as user admin:

Run a few OpenStack client commands to confirm that everything works. In particular, check that the heat service is listed.

stack$ openstack service list
+----------------------------------+-------------+----------------+
| ID                               | Name        | Type           |
+----------------------------------+-------------+----------------+
| 0b293dc58885450bad190bbfe3bacc40 | nova_legacy | compute_legacy |
| 1c05400514e341d09bd5a973136a9789 | cinderv3    | volumev3       |
| 3049ac1cc4a84b81a41d9fdb559ce922 | heat        | orchestration  |
| 775998becd0142579289a613a4313e1a | keystone    | identity       |
| 840023d4bc6f4e75a7fdb6e7d49ed28e | placement   | placement      |
| b9a2b39775a94d4f8a5fdfb25b9e4dc1 | neutron     | network        |
| c7b83375dafa428cbc21ceafb8611fbe | heat-cfn    | cloudformation |
| e62bfc0c37774f8da910b3062df43d53 | cinder      | block-storage  |
| f2ffbf578599481295140dec77bcd549 | nova        | compute        |
| f619511aea824a59a76e66702de4e1c2 | glance      | image          |
+----------------------------------+-------------+----------------+
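The check for heat can also be scripted instead of read off the table. This is a minimal sketch: has_service_type is a hypothetical helper (not part of the OpenStack CLI) that reads one service type per line, and the sample data is copied from the Type column above so that it runs without a cloud.

```shell
# Sketch: check that a service type appears in a list of service types.
# has_service_type is a hypothetical helper, not part of the OpenStack CLI.
has_service_type() {
    # $1: service type to look for; stdin: one service type per line
    grep -qxF -- "$1"
}

# Sample data copied from the Type column of the service list,
# so the sketch runs offline.
sample_types='compute_legacy
volumev3
orchestration
identity
placement
network
cloudformation
block-storage
compute
image'

if printf '%s\n' "$sample_types" | has_service_type orchestration; then
    echo "heat (orchestration) is registered"
else
    echo "heat (orchestration) is missing" >&2
fi
```

On the server, with the RC file sourced, the same helper can be fed live data: openstack service list -f value -c Type | has_service_type orchestration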

To avoid entering the password each time you source the RC file, you can optionally comment out the lines that prompt for it and set it statically:

stack$ vi admin-openrc.sh
# With Keystone you pass the keystone password.
#echo "Please enter your OpenStack Password for project $OS_PROJECT_NAME as user $OS_USERNAME: "
#read -sr OS_PASSWORD_INPUT
#export OS_PASSWORD=$OS_PASSWORD_INPUT
export OS_PASSWORD='xxxxxxxxxxx'
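If you would rather not keep the password in the file, one possible middle ground is to prompt only when OS_PASSWORD is not already set in the environment. This is a sketch, not what I used: ensure_os_password is a hypothetical helper name, and read -s requires Bash, as in the original RC file.

```shell
# Sketch: prompt for the OpenStack password only when OS_PASSWORD is not
# already set. ensure_os_password is a hypothetical helper name; it assumes
# OS_PROJECT_NAME and OS_USERNAME were exported earlier in the RC file.
ensure_os_password() {
    if [ -z "${OS_PASSWORD:-}" ]; then
        echo "Please enter your OpenStack Password for project ${OS_PROJECT_NAME:-?} as user ${OS_USERNAME:-?}: "
        # -s hides the typed characters (Bash); -r keeps backslashes literal
        read -sr OS_PASSWORD_INPUT
        export OS_PASSWORD=$OS_PASSWORD_INPUT
    fi
}

# Usage: call ensure_os_password at the end of the RC file, in place of the
# unconditional prompt.
```

With this variant, a password exported earlier in the session is reused and the prompt appears only in a fresh shell.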

You can copy the RC file to keystonerc_admin:

stack$ cp admin-openrc.sh keystonerc_admin
stack$ source keystonerc_admin

You can run some other OpenStack client commands to confirm that everything is working properly:

stack$ openstack catalog list
+-------------+----------------+-------------------------------------------------------------------------------+
| Name        | Type           | Endpoints                                                                     |
+-------------+----------------+-------------------------------------------------------------------------------+
| nova_legacy | compute_legacy | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/compute/v2/d81af43ddd074376a8e7fff88d61c905  |
|             |                |                                                                               |
| cinderv3    | volumev3       | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/volume/v3/d81af43ddd074376a8e7fff88d61c905   |
|             |                |                                                                               |
| heat        | orchestration  | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/heat-api/v1/d81af43ddd074376a8e7fff88d61c905 |
|             |                |                                                                               |
| keystone    | identity       | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/identity                                     |
|             |                |                                                                               |
| placement   | placement      | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/placement                                    |
|             |                |                                                                               |
| neutron     | network        | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx:9696/                                        |
|             |                |                                                                               |
| heat-cfn    | cloudformation | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/heat-api-cfn/v1                              |
|             |                |                                                                               |
| cinder      | block-storage  | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/volume/v3/d81af43ddd074376a8e7fff88d61c905   |
|             |                |                                                                               |
| nova        | compute        | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/compute/v2.1                                 |
|             |                |                                                                               |
| glance      | image          | RegionOne                                                                     |
|             |                |   public: http://xxx.xxx.xxx.xxx/image                                        |
|             |                |                                                                               |
+-------------+----------------+-------------------------------------------------------------------------------+

I checked the endpoint list to find the public endpoint of the orchestration service (heat).

stack$ openstack endpoint list
+----------------------------------+-----------+--------------+----------------+---------+-----------+--------------------------------------------------+
| ID                               | Region    | Service Name | Service Type   | Enabled | Interface | URL                                              |
+----------------------------------+-----------+--------------+----------------+---------+-----------+--------------------------------------------------+
| 064822424bfe4c4394951dce1832e316 | RegionOne | cinder       | block-storage  | True    | public    | http://xxx.xxx.xxx.xxx/volume/v3/$(project_id)s  |
| 11fbdcab6dfe42cb82c3ac4c3f61296a | RegionOne | nova         | compute        | True    | public    | http://xxx.xxx.xxx.xxx/compute/v2.1              |
| 2cb9561aa98a4c079d0c7f35ba347647 | RegionOne | keystone     | identity       | True    | public    | http://xxx.xxx.xxx.xxx/identity                  |
| 52bcd8dde6fb4f7b82d976cf71a0d37e | RegionOne | cinderv3     | volumev3       | True    | public    | http://xxx.xxx.xxx.xxx/volume/v3/$(project_id)s  |
| 82d29e1ceb464b7f831b84434ebb0be3 | RegionOne | glance       | image          | True    | public    | http://xxx.xxx.xxx.xxx/image                     |
| 98037666c0e74127ab713bd4865b062d | RegionOne | neutron      | network        | True    | public    | http://xxx.xxx.xxx.xxx:9696/                     |
| 9bda08ed79fe4fc399f94f6274ceaca0 | RegionOne | placement    | placement      | True    | public    | http://xxx.xxx.xxx.xxx/placement                 |
| cc35f42f35304534b83301f4fc70e778 | RegionOne | nova_legacy  | compute_legacy | True    | public    | http://xxx.xxx.xxx.xxx/compute/v2/$(project_id)s |
+----------------------------------+-----------+--------------+----------------+---------+-----------+--------------------------------------------------+

stack$ openstack orchestration service list
+-----------------+-------------+--------------------------------------+-----------------+--------+----------------------------+--------+
| Hostname        | Binary      | Engine ID                            | Host            | Topic  | Updated At                 | Status |
+-----------------+-------------+--------------------------------------+-----------------+--------+----------------------------+--------+
| xxxxxxxxxxxxxxx | heat-engine | 5ffc9a0d-2756-462a-8da1-b5f2aeca165b | xxxxxxxxxxxxxxx | engine | 2021-11-15T21:40:55.000000 | up     |
| xxxxxxxxxxxxxxx | heat-engine | 54387f8e-44d7-4749-853a-c06d6be92ace | xxxxxxxxxxxxxxx | engine | 2021-11-15T21:40:55.000000 | up     |
+-----------------+-------------+--------------------------------------+-----------------+--------+----------------------------+--------+

KYPO CRP installation

OpenStack Requirements

First, check the OpenStack requirements here.

root# openstack flavor create --ram 2048 --disk 20 --vcpus 1 csirtmu.tiny1x2
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| description                | None                                 |
| disk                       | 20                                   |
| id                         | 69fb4a25-d8f3-4a5b-afbd-8a4823210733 |
| name                       | csirtmu.tiny1x2                      |
| os-flavor-access:is_public | True                                 |
| properties                 |                                      |
| ram                        | 2048                                 |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
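If the installation has to be repeated, the flavor step can be made safe to rerun. A minimal sketch, assuming credentials are already sourced: ensure_flavor is a hypothetical helper built on the openstack flavor show and openstack flavor create commands.

```shell
# Sketch: create a flavor only if it does not exist yet.
# ensure_flavor is a hypothetical helper; it relies on the
# `openstack flavor show` and `openstack flavor create` commands.
ensure_flavor() {
    # $1: flavor name, $2: RAM in MB, $3: disk in GB, $4: number of vCPUs
    if openstack flavor show "$1" >/dev/null 2>&1; then
        echo "flavor $1 already exists"
    else
        openstack flavor create --ram "$2" --disk "$3" --vcpus "$4" "$1"
    fi
}

# Usage, with credentials sourced (same values as the command in this article):
#   ensure_flavor csirtmu.tiny1x2 2048 20 1
```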

stack$ wget https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64.img -P /tmp/
Resolving cloud-images.ubuntu.com (cloud-images.ubuntu.com)... 91.189.88.248, 91.189.88.247, 2001:67c:1360:8001::33, ...
Connecting to cloud-images.ubuntu.com (cloud-images.ubuntu.com)|91.189.88.248|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 568131584 (542M) [application/octet-stream]
Saving to: ‘/tmp/focal-server-cloudimg-amd64.img.1’

focal-server-cloudimg-amd64.img.1 100%[===========================================================================>] 541.81M 87.5MB/s in 6.2s

2021-11-15 21:50:30 (88.1 MB/s) - ‘/tmp/focal-server-cloudimg-amd64.img.1’ saved [568131584/568131584]

stack$ openstack image create --disk-format qcow2 --container-format bare --public --property \
> os_type=linux --file /tmp/focal-server-cloudimg-amd64.img ubuntu-focal-x86_64
+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| Field            | Value |
+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| container_format | bare |
| created_at       | 2021-11-15T21:49:14Z |
| disk_format      | qcow2 |
| file             | /v2/images/f748c173-c9d7-4ded-92c2-d84d9d6bcd82/file |
| id               | f748c173-c9d7-4ded-92c2-d84d9d6bcd82 |
| min_disk         | 0 |
| min_ram          | 0 |
| name             | ubuntu-focal-x86_64 |
| owner            | d81af43ddd074376a8e7fff88d61c905 |
| properties       | os_hidden='False', os_type='linux', owner_specified.openstack.md5='', owner_specified.openstack.object='images/ubuntu-focal-x86_64', owner_specified.openstack.sha256='' |
| protected        | False |
| schema           | /v2/schemas/image |
| status           | queued |
| tags             | |
| updated_at       | 2021-11-15T21:49:14Z |
| visibility       | public |
+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
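The status field in this output reads queued, so before relying on the image it may be worth confirming that it has become active. The sketch below is a generic polling loop; wait_for_value is a hypothetical helper, and the retry count and delay are arbitrary choices.

```shell
# Sketch: poll a command until it prints an expected value or a retry limit
# is reached. wait_for_value is a hypothetical helper.
wait_for_value() {
    # $1: expected value, $2: max attempts, $3: seconds between attempts,
    # remaining arguments: the command to run on each attempt
    expected=$1; attempts=$2; delay=$3
    shift 3
    i=1
    current=""
    while [ "$i" -le "$attempts" ]; do
        # A failing command counts as "no value yet"
        current=$("$@" 2>/dev/null) || current=""
        if [ "$current" = "$expected" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "gave up waiting for '$expected' (last value: '$current')" >&2
    return 1
}

# Usage, with credentials sourced:
#   wait_for_value active 30 2 openstack image show ubuntu-focal-x86_64 -f value -c status
```

The helper takes the command as trailing arguments so the same loop can watch other resources, for example a stack status.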

Base Infrastructure

I then followed this tutorial that describes how to prepare the KYPO base infrastructure used by the KYPO Cyber Range Platform.

stack$ sudo apt install python3-pip openssh-client jq
Reading package lists... Done
Building dependency tree
Reading state information... Done
openssh-client is already the newest version (1:8.2p1-4ubuntu0.3).
openssh-client set to manually installed.
python3-pip is already the newest version (20.0.2-5ubuntu1.6).
The following NEW packages will be installed:
  jq libjq1 libonig5
0 upgraded, 3 newly installed, 0 to remove and 0 not upgraded.
Need to get 313 kB of archives.
After this operation, 1062 kB of additional disk space will be used.
Get:1 http://eu-west-3.ec2.archive.ubuntu.com/ubuntu focal/universe amd64 libonig5 amd64 6.9.4-1 [142 kB]
Get:2 http://eu-west-3.ec2.archive.ubuntu.com/ubuntu focal-updates/universe amd64 libjq1 amd64 1.6-1ubuntu0.20.04.1 [121 kB]
Get:3 http://eu-west-3.ec2.archive.ubuntu.com/ubuntu focal-updates/universe amd64 jq amd64 1.6-1ubuntu0.20.04.1 [50.2 kB]
Fetched 313 kB in 0s (2145 kB/s)
Selecting previously unselected package libonig5:amd64.
(Reading database ... 140023 files and directories currently installed.)
Preparing to unpack .../libonig5_6.9.4-1_amd64.deb ...
Unpacking libonig5:amd64 (6.9.4-1) ...
Selecting previously unselected package libjq1:amd64.
Preparing to unpack .../libjq1_1.6-1ubuntu0.20.04.1_amd64.deb ...
Unpacking libjq1:amd64 (1.6-1ubuntu0.20.04.1) ...
Selecting previously unselected package jq.
Preparing to unpack .../jq_1.6-1ubuntu0.20.04.1_amd64.deb ...
Unpacking jq (1.6-1ubuntu0.20.04.1) ...
Setting up libonig5:amd64 (6.9.4-1) ...
Setting up libjq1:amd64 (1.6-1ubuntu0.20.04.1) ...
Setting up jq (1.6-1ubuntu0.20.04.1) ...
Processing triggers for man-db (2.9.1-1) ...
Processing triggers for libc-bin (2.31-0ubuntu9.2) ...

stack$ sudo pip3 install pipenv

/usr/lib/python3/dist-packages/secretstorage/dhcrypto.py:15: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead

from cryptography.utils import int_from_bytes

/usr/lib/python3/dist-packages/secretstorage/util.py:19: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead

from cryptography.utils import int_from_bytes

Collecting pipenv

Downloading pipenv-2021.11.15-py2.py3-none-any.whl (3.6 MB)

|████████████████████████████████| 3.6 MB 25.8 MB/s

Requirement already satisfied: virtualenv in /usr/local/lib/python3.8/dist-packages (from pipenv) (20.10.0)

Requirement already satisfied: setuptools>=36.2.1 in /usr/local/lib/python3.8/dist-packages (from pipenv) (59.1.0)

Collecting virtualenv-clone>=0.2.5

Downloading virtualenv_clone-0.5.7-py3-none-any.whl (6.6 kB)

Requirement already satisfied: certifi in /usr/lib/python3/dist-packages (from pipenv) (2019.11.28)

Requirement already satisfied: pip>=18.0 in /usr/local/lib/python3.8/dist-packages (from pipenv) (21.3.1)

Requirement already satisfied: filelock<4,>=3.2 in /usr/local/lib/python3.8/dist-packages (from virtualenv->pipenv) (3.3.2)

Requirement already satisfied: platformdirs<3,>=2 in /usr/local/lib/python3.8/dist-packages (from virtualenv->pipenv) (2.4.0)

Requirement already satisfied: distlib<1,>=0.3.1 in /usr/local/lib/python3.8/dist-packages (from virtualenv->pipenv) (0.3.3)

Requirement already satisfied: six<2,>=1.9.0 in /usr/local/lib/python3.8/dist-packages (from virtualenv->pipenv) (1.16.0)

Requirement already satisfied: backports.entry-points-selectable>=1.0.4 in /usr/local/lib/python3.8/dist-packages (from virtualenv->pipenv) (1.1.1)

Installing collected packages: virtualenv-clone, pipenv

Successfully installed pipenv-2021.11.15 virtualenv-clone-0.5.7

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

Create application credentials as shown in this video. Be sure to generate the application credentials with the Unrestricted option enabled.

Once the application credentials are created, download the app-cred-kypo-openrc.sh file from the Horizon dashboard to your desktop, then copy and paste its contents into a file on the server.

stack$ vi app-cred-kypo-openrc.sh

Source the file

stack$ source app-cred-kypo-openrc.sh

stack$ git clone https://gitlab.ics.muni.cz/muni-kypo-crp/devops/kypo-crp-openstack-base.git
Cloning into 'kypo-crp-openstack-base'...
remote: Enumerating objects: 269, done.
remote: Counting objects: 100% (138/138), done.
remote: Compressing objects: 100% (80/80), done.
remote: Total 269 (delta 54), reused 129 (delta 47), pack-reused 131
Receiving objects: 100% (269/269), 78.56 KiB | 1.31 MiB/s, done.
Resolving deltas: 100% (111/111), done.

stack$ cd kypo-crp-openstack-base

stack$ pipenv install

Creating a virtualenv for this project...

Pipfile: /opt/stack/devstack/kypo-crp-openstack-base/Pipfile

Using /usr/bin/python3.8 (3.8.10) to create virtualenv...

⠴ Creating virtual environment...created virtual environment CPython3.8.10.final.0-64 in 235ms

creator CPython3Posix(dest=/opt/stack/.local/share/virtualenvs/kypo-crp-openstack-base-5QbM23-5, clear=False, no_vcs_ignore=False, global =False)

seeder FromAppData(download=False, pip=bundle, setuptools=bundle, wheel=bundle, via=copy, app_data_dir=/opt/stack/.local/share/virtualenv )

added seed packages: pip==21.3.1, setuptools==58.3.0, wheel==0.37.0

activators BashActivator,CShellActivator,FishActivator,NushellActivator,PowerShellActivator,PythonActivator

✔ Successfully created virtual environment!

Virtualenv location: /opt/stack/.local/share/virtualenvs/kypo-crp-openstack-base-5QbM23-5

Installing dependencies from Pipfile.lock (5ccba9)...

🐍 ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 61/61 — 00:01:39

To activate this project's virtualenv, run pipenv shell.

Alternatively, run a command inside the virtualenv with pipenv run.

stack$ pipenv shell

Creating a virtualenv for this project...

Pipfile: /opt/stack/Pipfile

Using /usr/bin/python3 (3.8.10) to create virtualenv...

⠋ Creating virtual environment...created virtual environment CPython3.8.10.final.0-64 in 619ms

creator CPython3Posix(dest=/opt/stack/.local/share/virtualenvs/stack-mJieuOd4, clear=False, no_vcs_ignore=False, global=False)

seeder FromAppData(download=False, pip=bundle, setuptools=bundle, wheel=bundle, via=copy, app_data_dir=/opt/stack/.local/share/virtualenv)

added seed packages: pip==21.3.1, setuptools==58.3.0, wheel==0.37.0

activators BashActivator,CShellActivator,FishActivator,NushellActivator,PowerShellActivator,PythonActivator

✔ Successfully created virtual environment!

Virtualenv location: /opt/stack/.local/share/virtualenvs/stack-mJieuOd4

Creating a Pipfile for this project...

Launching subshell in virtual environment...

stack$ . /opt/stack/.local/share/virtualenvs/stack-mJieuOd4/bin/activate

((kypo-crp-openstack-base) ) stack$ pipenv sync
Installing dependencies from Pipfile.lock (5ccba9)...
🐍 ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 0/0 — 00:00:00
All dependencies are now up-to-date!

Before starting the deployment, you need to obtain several configuration values that might be specific to your OpenStack instance.

stack$ openstack network list --external --column Name
+--------+
| Name   |
+--------+
| public |
+--------+

stack$ openstack image list --column Name
+---------------------------------+
| Name                            |
+---------------------------------+
| Fedora-Cloud-Base-33-1.2.x86_64 |
| cirros-0.5.2-x86_64-disk        |
| ubuntu-focal-x86_64             |
+---------------------------------+

stack$ openstack flavor list --column Name
+-----------+
| Name      |
+-----------+
| m1.tiny   |
| m1.small  |
| m1.medium |
| m1.large  |
| m1.nano   |
| m1.xlarge |
| m1.micro  |
| cirros256 |
| ds512M    |
| ds1G      |
| ds2G      |
| ds4G      |
+-----------+

Unset all the variables set by the previous source command:

stack$ unset "${!OS_@}"
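In Bash, ${!OS_@} expands to the names of all variables that start with OS_, so the command above removes every variable set by the RC file. For a shell without that expansion, an equivalent could be sketched as follows; unset_os_vars is a hypothetical helper name, and the demo values are throwaway.

```shell
# Sketch: unset every environment variable whose name starts with OS_,
# for shells that lack Bash's ${!OS_@} expansion.
# unset_os_vars is a hypothetical helper name.
unset_os_vars() {
    # env prints NAME=value pairs; sed keeps only the names beginning with OS_
    for name in $(env | sed -n 's/^\(OS_[A-Za-z0-9_]*\)=.*/\1/p'); do
        unset "$name"
    done
}

# Demo with throwaway values.
export OS_AUTH_URL="http://example.invalid/identity" OS_USERNAME="demo"
unset_os_vars
if [ -z "${OS_USERNAME:-}" ] && [ -z "${OS_AUTH_URL:-}" ]; then
    echo "OS_ variables are unset"
fi
```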

Below is the default openstack-defaults.sh file.

stack$ cat openstack-defaults.sh
#!/usr/bin/env bash
export KYPO_HEAD_FLAVOR="standard.large"
export KYPO_HEAD_IMAGE="ubuntu-focal-x86_64"
export KYPO_HEAD_USER="ubuntu"
export KYPO_PROXY_FLAVOR="standard.medium"
export KYPO_PROXY_IMAGE="ubuntu-focal-x86_64"
export KYPO_PROXY_USER="ubuntu"
export DNS1="1.1.1.1"
export DNS2="1.0.0.1"

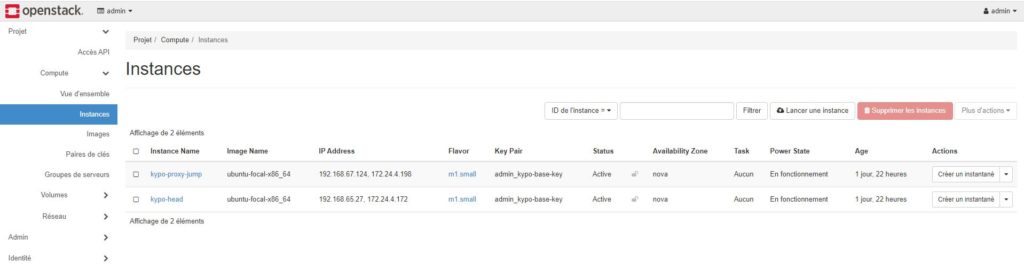

Edit this file and set the desired values for images (<kypo_base_image>) and flavors (<kypo_base_flavor>). In my case, I replaced the standard.large and standard.medium flavors with m1.small, because my instance is modest (4 vCPUs, 16 GB of RAM).

stack$ cat openstack-defaults.sh
#!/usr/bin/env bash
export KYPO_HEAD_FLAVOR="m1.small"
export KYPO_HEAD_IMAGE="ubuntu-focal-x86_64"
export KYPO_HEAD_USER="ubuntu"
export KYPO_PROXY_FLAVOR="m1.small"
export KYPO_PROXY_IMAGE="ubuntu-focal-x86_64"
export KYPO_PROXY_USER="ubuntu"
export DNS1="1.1.1.1"
export DNS2="1.0.0.1"

stack$ source openstack-defaults.sh
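The default flavors standard.large and standard.medium do not appear in the flavor list obtained earlier, which is why they had to be changed. A check of this kind can be scripted. In this sketch, missing_names is a hypothetical helper, and the flavor names are copied from the openstack flavor list output in this article so that it runs offline.

```shell
# Sketch: report which wanted names are absent from a list of available names.
# missing_names is a hypothetical helper; the available names arrive on stdin,
# one per line, e.g. from: openstack flavor list -f value -c Name
missing_names() {
    available=$(cat)
    for wanted in "$@"; do
        if ! printf '%s\n' "$available" | grep -qxF -- "$wanted"; then
            printf '%s\n' "$wanted"
        fi
    done
}

# Flavor names copied from the flavor list in this article.
flavors='m1.tiny
m1.small
m1.medium
m1.large
m1.nano
m1.xlarge
m1.micro
cirros256
ds512M
ds1G
ds2G
ds4G'

# Prints the names that are not available: standard.large and standard.medium
printf '%s\n' "$flavors" | missing_names standard.large standard.medium m1.small
```

With credentials sourced, the live list can replace the sample data, and "$KYPO_HEAD_FLAVOR" "$KYPO_PROXY_FLAVOR" can be passed as the wanted names.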

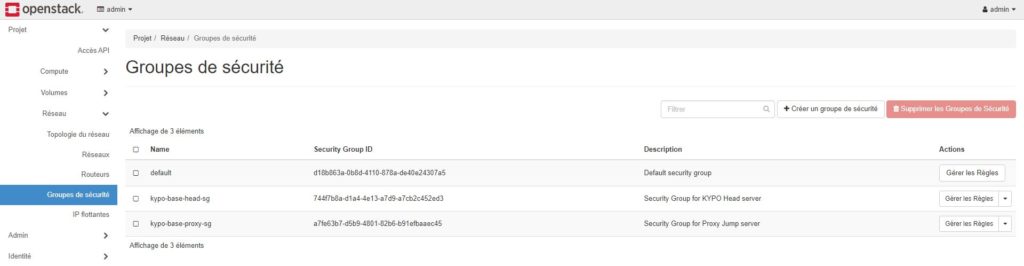

I checked the default security group rules. I’m not sure whether I have to delete them; the tutorial is not clear on this point.

stack$ openstack security group rule list default
+--------------------------------------+-------------+-----------+-----------+------------+--------------------------------------+
| ID                                   | IP Protocol | Ethertype | IP Range  | Port Range | Remote Security Group                |
+--------------------------------------+-------------+-----------+-----------+------------+--------------------------------------+
| 38b8c48a-494c-49bd-bee3-51e2c415f30b | None        | IPv4      | 0.0.0.0/0 |            | d18b863a-0b8d-4110-878a-de40e24307a5 |
| 7e5ae97e-cb42-4024-aeef-ed631a2b567c | None        | IPv6      | ::/0      |            | None                                 |
| d8808eb1-1edc-4e3f-8ddb-7061927fe9a3 | None        | IPv6      | ::/0      |            | d18b863a-0b8d-4110-878a-de40e24307a5 |
| de4d2cce-2fee-4e01-b966-5f7420c5d484 | None        | IPv4      | 0.0.0.0/0 |            | None                                 |
+--------------------------------------+-------------+-----------+-----------+------------+--------------------------------------+

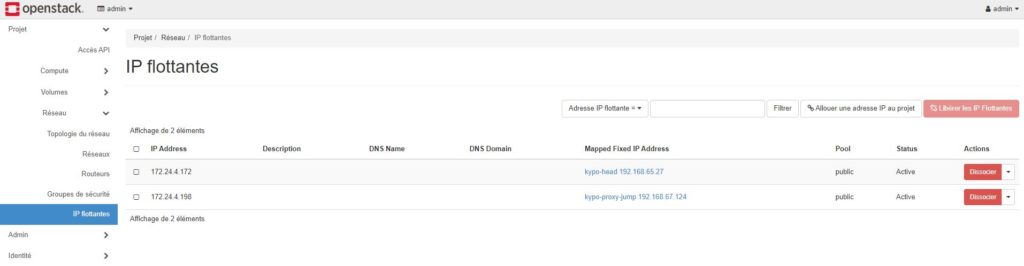

I then bootstrapped the floating IPs and the keypair. The results are saved in the kypo-base-params.yml file, and the private key of the keypair is saved in <openstack-project>_kypo-base-key.key.

stack$ ./bootstrap.sh public
Floating IP kypo-base-head for network public does not exist. Creating...
Floating IP kypo-base-proxy for network public does not exist. Creating...
No keypair with a name or ID of 'admin_kypo-base-key' exists.
Creating keypair admin_kypo-base-key.
fingerprint: 86:8f:ea:34:dc:4b:bc:77:a8:6d:d5:7b:42:3c:a4:e4
name: admin_kypo-base-key
user_id: 042e20a21d0f4cf2a8473daf72ca2193
Private key for user access does not exist. Creating...
Generating RSA private key, 2048 bit long modulus (2 primes)
......................+++++
..+++++
e is 65537 (0x010001)

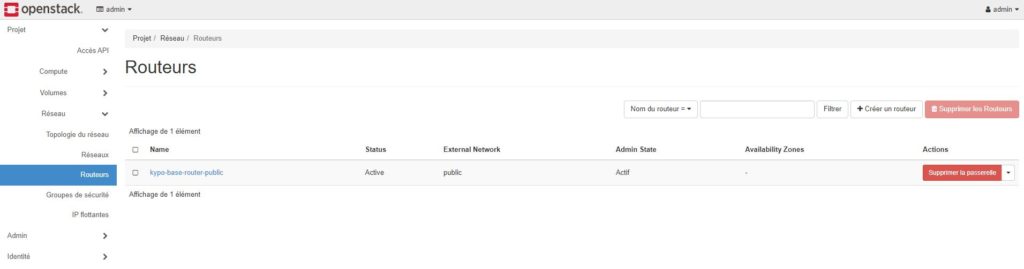

stack$ ./create-base.sh
2021-11-15 23:57:31Z [kypo-base-networking-stack]: CREATE_IN_PROGRESS Stack CREATE started
2021-11-15 23:57:31Z [kypo-base-networking-stack.kypo-base-net]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:31Z [kypo-base-networking-stack.kypo-base-net]: CREATE_COMPLETE state changed
2021-11-15 23:57:31Z [kypo-base-networking-stack.kypo-base-subnet]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:32Z [kypo-base-networking-stack.kypo-base-router-public]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:32Z [kypo-base-networking-stack.kypo-base-subnet]: CREATE_COMPLETE state changed
2021-11-15 23:57:32Z [kypo-base-networking-stack.kypo-base-router-public-port]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:33Z [kypo-base-networking-stack.kypo-base-router-public-port]: CREATE_COMPLETE state changed
2021-11-15 23:57:34Z [kypo-base-networking-stack.kypo-base-router-public]: CREATE_COMPLETE state changed
2021-11-15 23:57:34Z [kypo-base-networking-stack.kypo-base-router-public-interface]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:36Z [kypo-base-networking-stack.kypo-base-router-public-interface]: CREATE_COMPLETE state changed
2021-11-15 23:57:36Z [kypo-base-networking-stack]: CREATE_COMPLETE Stack CREATE completed successfully
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| id                  | cb20a1c9-da98-4699-a14e-09b4d2ee78a4 |
| stack_name          | kypo-base-networking-stack           |
| description         | KYPO base networking.                |
| creation_time       | 2021-11-15T23:57:30Z                 |
| updated_time        | None                                 |
| stack_status        | CREATE_COMPLETE                      |
| stack_status_reason | Stack CREATE completed successfully  |
+---------------------+--------------------------------------+
2021-11-15 23:57:42Z [kypo-base-security-groups-stack]: CREATE_IN_PROGRESS Stack CREATE started
2021-11-15 23:57:43Z [kypo-base-security-groups-stack.kypo-base-head-sg]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:43Z [kypo-base-security-groups-stack.kypo-base-head-sg]: CREATE_COMPLETE state changed
2021-11-15 23:57:44Z [kypo-base-security-groups-stack.kypo-global-ingress-icmp]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:44Z [kypo-base-security-groups-stack.kypo-global-ingress-icmp]: CREATE_COMPLETE state changed
2021-11-15 23:57:45Z [kypo-base-security-groups-stack.kypo-base-proxy-sg]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:45Z [kypo-base-security-groups-stack.kypo-base-proxy-sg]: CREATE_COMPLETE state changed
2021-11-15 23:57:45Z [kypo-base-security-groups-stack.kypo-global-remote-security-groups]: CREATE_IN_PROGRESS state changed
2021-11-15 23:57:57Z [kypo-base-security-groups-stack.kypo-global-remote-security-groups]: CREATE_COMPLETE state changed
2021-11-15 23:57:57Z [kypo-base-security-groups-stack]: CREATE_COMPLETE Stack CREATE completed successfully
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| id                  | 0b52e47b-45d8-47cb-aa06-c204feedb038 |
| stack_name          | kypo-base-security-groups-stack      |
| description         | KYPO base security groups.           |
| creation_time       | 2021-11-15T23:57:42Z                 |
| updated_time        | None                                 |
| stack_status        | CREATE_COMPLETE                      |
| stack_status_reason | Stack CREATE completed successfully  |
+---------------------+--------------------------------------+
2021-11-15 23:58:00Z [kypo-head-stack]: CREATE_IN_PROGRESS Stack CREATE started
2021-11-15 23:58:00Z [kypo-head-stack.kypo-head-port]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:01Z [kypo-head-stack.kypo-head-port]: CREATE_COMPLETE state changed
2021-11-15 23:58:01Z [kypo-head-stack.kypo-head-floating-ip]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:01Z [kypo-head-stack.kypo-head]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:01Z [kypo-head-stack.kypo-head-floating-ip]: CREATE_COMPLETE state changed
2021-11-15 23:58:06Z [kypo-head-stack.kypo-head]: CREATE_COMPLETE state changed
2021-11-15 23:58:06Z [kypo-head-stack]: CREATE_COMPLETE Stack CREATE completed successfully
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| id                  | 043f10e3-a13d-4e87-9732-e85e13eb6e6c |
| stack_name          | kypo-head-stack                      |
| description         | KYPO Head server.                    |
| creation_time       | 2021-11-15T23:58:00Z                 |
| updated_time        | None                                 |
| stack_status        | CREATE_COMPLETE                      |
| stack_status_reason | Stack CREATE completed successfully  |
+---------------------+--------------------------------------+
2021-11-15 23:58:12Z [kypo-proxy-jump-stack]: CREATE_IN_PROGRESS Stack CREATE started
2021-11-15 23:58:12Z [kypo-proxy-jump-stack.kypo-proxy-jump-port]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:13Z [kypo-proxy-jump-stack.kypo-proxy-jump-port]: CREATE_COMPLETE state changed
2021-11-15 23:58:13Z [kypo-proxy-jump-stack.kypo-proxy-jump-floating-ip]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:13Z [kypo-proxy-jump-stack.kypo-proxy-jump]: CREATE_IN_PROGRESS state changed
2021-11-15 23:58:14Z [kypo-proxy-jump-stack.kypo-proxy-jump-floating-ip]: CREATE_COMPLETE state changed
2021-11-15 23:58:19Z [kypo-proxy-jump-stack.kypo-proxy-jump]: CREATE_COMPLETE state changed
2021-11-15 23:58:19Z [kypo-proxy-jump-stack]: CREATE_COMPLETE Stack CREATE completed successfully
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| id                  | 479af43a-a6f8-4413-8291-5ce03eb56a4b |
| stack_name          | kypo-proxy-jump-stack                |
| description         | KYPO Proxy Jump server.              |
| creation_time       | 2021-11-15T23:58:12Z                 |
| updated_time        | None                                 |
| stack_status        | CREATE_COMPLETE                      |
| stack_status_reason | Stack CREATE completed successfully  |
+---------------------+--------------------------------------+

I checked the stack list:

    stack$ openstack stack list
    +--------------------------------------+---------------------------------+-----------------+----------------------+--------------+
    | ID                                   | Stack Name                      | Stack Status    | Creation Time        | Updated Time |
    +--------------------------------------+---------------------------------+-----------------+----------------------+--------------+
    | 479af43a-a6f8-4413-8291-5ce03eb56a4b | kypo-proxy-jump-stack           | CREATE_COMPLETE | 2021-11-15T23:58:12Z | None         |
    | 043f10e3-a13d-4e87-9732-e85e13eb6e6c | kypo-head-stack                 | CREATE_COMPLETE | 2021-11-15T23:58:00Z | None         |
    | 0b52e47b-45d8-47cb-aa06-c204feedb038 | kypo-base-security-groups-stack | CREATE_COMPLETE | 2021-11-15T23:57:42Z | None         |
    | cb20a1c9-da98-4699-a14e-09b4d2ee78a4 | kypo-base-networking-stack      | CREATE_COMPLETE | 2021-11-15T23:57:30Z | None         |
    +--------------------------------------+---------------------------------+-----------------+----------------------+--------------+
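The same check can be scripted instead of read by eye. This is a minimal sketch, assuming the `openstack` client's standard `-f value -c <column>` output options; the function name `check_stacks` is only illustrative, and it reads the name and status columns from standard input.

```bash
# check_stacks: read "<stack name> <stack status>" lines on stdin and
# report every stack whose status is not CREATE_COMPLETE.
# Exit status is 1 if at least one such stack is found, 0 otherwise.
check_stacks() {
    awk '
        NF >= 2 && $NF != "CREATE_COMPLETE" {
            print "NOT READY: " $1 " (" $NF ")"
            bad = 1
        }
        END { exit bad + 0 }
    '
}

# Intended use on the OpenStack host (not run here):
#   openstack stack list -f value -c "Stack Name" -c "Stack Status" | check_stacks
```

With the four stacks listed above, the function would print nothing and return 0.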

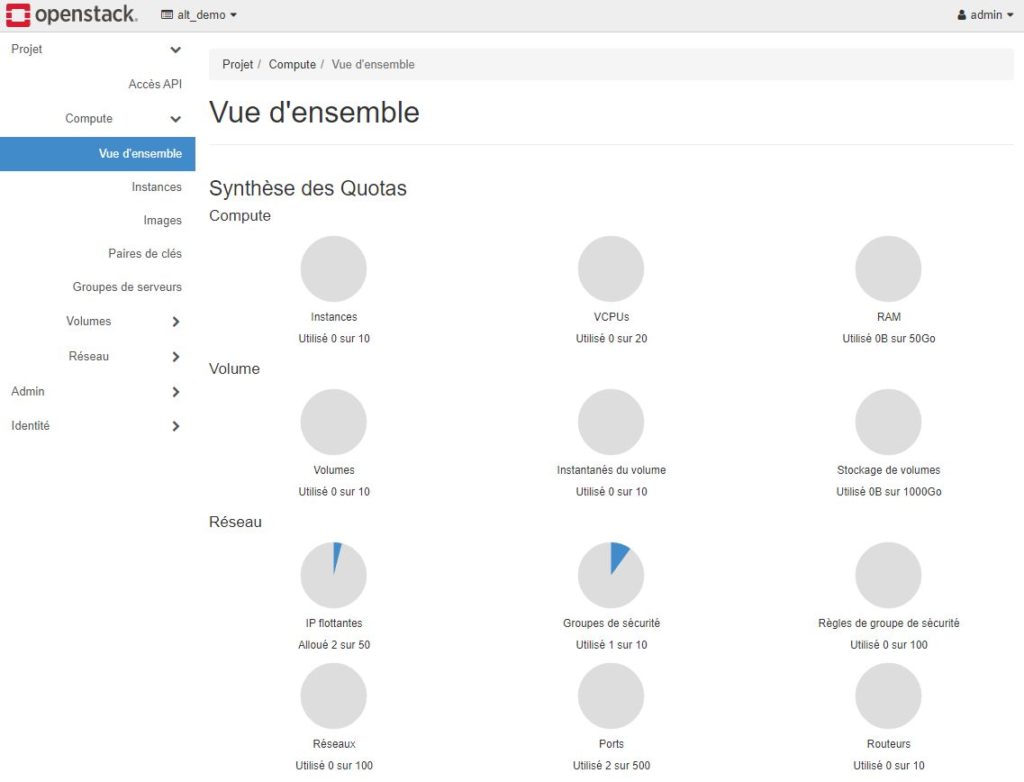

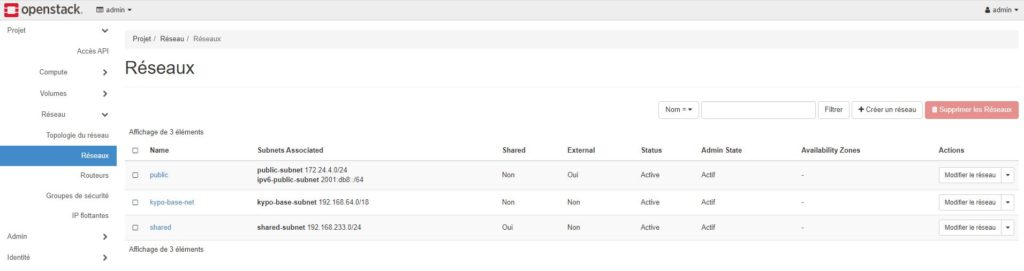

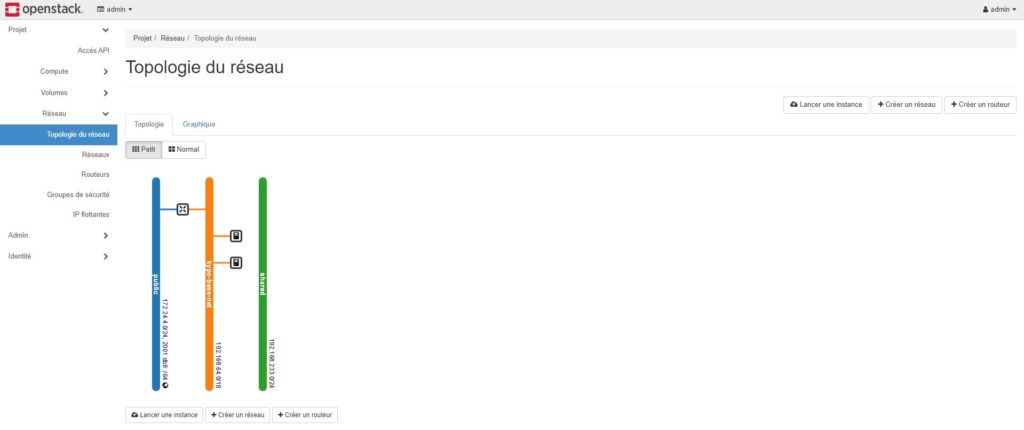

I also checked the whole installation in the Horizon dashboard (GUI).

I ran the Ansible scripts to test connectivity. Ping and SSH both work.

    ((kypo-crp-openstack-base) ) root@xxxxxxxx:~/kypo-crp-openstack-base# ./ansible-check-base.sh

    PLAY [Check Base Stack] ********************************************************

    TASK [ping : Wait for ssh connection] ******************************************
    ok: [kypo-base-head]
    ok: [kypo-base-proxy]

    TASK [Try to reach the machine via ping] ***************************************
    ok: [kypo-base-head]
    ok: [kypo-base-proxy]

    PLAY RECAP *********************************************************************
    kypo-base-head  : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    kypo-base-proxy : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    ((kypo-crp-openstack-base) ) root@xxxx:~/kypo-crp-openstack-base# ./ansible-user-access.sh

    PLAY [Create Access for KYPO User] *********************************************

    TASK [Gathering Facts] *********************************************************
    ok: [kypo-base-proxy]

    TASK [user : Ensure group kypo] ************************************************
    changed: [kypo-base-proxy]

    TASK [Ensure user kypo] ********************************************************
    changed: [kypo-base-proxy]

    TASK [Set authorized key for kypo user] ****************************************
    changed: [kypo-base-proxy]

    TASK [Add kypo user to sudoers] ************************************************
    changed: [kypo-base-proxy]

    PLAY RECAP *********************************************************************
    kypo-base-proxy : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Everything seems to be OK.

Now I have a problem. I rebooted my OpenStack server and lost connectivity between the OpenStack host and the instance VMs. Ping and SSH both fail.

After some research, I found that a DevStack environment is not persistent across server reboots.

DevStack is a set of scripts that automates the installation of OpenStack on Ubuntu and Fedora Linux. It is a tool that helps OpenStack developers set up an environment quickly: the scripts download or clone the packages and repositories needed to build an OpenStack cloud. One drawback of this approach is that the environment is not persistent across server reboots.

Newer versions of DevStack run their services as systemd units, so you can use systemctl to manage them. I checked the OpenStack services. They all seem to be OK:

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# sudo systemctl list-units devstack@*
    UNIT                                  LOAD   ACTIVE SUB     DESCRIPTION
    devstack@c-api.service                loaded active running Devstack devstack@c-api.service
    devstack@c-sch.service                loaded active running Devstack devstack@c-sch.service
    devstack@c-vol.service                loaded active running Devstack devstack@c-vol.service
    devstack@dstat.service                loaded active running Devstack devstack@dstat.service
    devstack@etcd.service                 loaded active running Devstack devstack@etcd.service
    devstack@g-api.service                loaded active running Devstack devstack@g-api.service
    devstack@h-api-cfn.service            loaded active running Devstack devstack@h-api-cfn.service
    devstack@h-api.service                loaded active running Devstack devstack@h-api.service
    devstack@h-eng.service                loaded active running Devstack devstack@h-eng.service
    devstack@keystone.service             loaded active running Devstack devstack@keystone.service
    devstack@n-api-meta.service           loaded active running Devstack devstack@n-api-meta.service
    devstack@n-api.service                loaded active running Devstack devstack@n-api.service
    devstack@n-cond-cell1.service         loaded active running Devstack devstack@n-cond-cell1.service
    devstack@n-cpu.service                loaded active running Devstack devstack@n-cpu.service
    devstack@n-novnc-cell1.service        loaded active running Devstack devstack@n-novnc-cell1.service
    devstack@n-sch.service                loaded active running Devstack devstack@n-sch.service
    devstack@n-super-cond.service         loaded active running Devstack devstack@n-super-cond.service
    devstack@placement-api.service        loaded active running Devstack devstack@placement-api.service
    devstack@q-ovn-metadata-agent.service loaded active running Devstack devstack@q-ovn-metadata-agent.service
    devstack@q-svc.service                loaded active running Devstack devstack@q-svc.service

    LOAD   = Reflects whether the unit definition was properly loaded.
    ACTIVE = The high-level unit activation state, i.e. generalization of SUB.
    SUB    = The low-level unit activation state, values depend on unit type.

    20 loaded units listed. Pass --all to see loaded but inactive units, too.
    To show all installed unit files use 'systemctl list-unit-files'.
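With twenty units, a small filter is less error-prone than scanning the list. The sketch below assumes the `--plain` and `--no-legend` options of `systemctl list-units`, which strip the header, footer and status bullets; the function name `failed_devstack_units` is hypothetical.

```bash
# failed_devstack_units: read `systemctl list-units --plain --no-legend`
# lines (UNIT LOAD ACTIVE SUB DESCRIPTION...) on stdin and print every
# devstack@ unit that is not in the "active running" state.
# Exit status is 1 if at least one such unit is found, 0 otherwise.
failed_devstack_units() {
    awk '
        $1 ~ /^devstack@/ && !($3 == "active" && $4 == "running") {
            print $1 " " $3 "/" $4
            bad = 1
        }
        END { exit bad + 0 }
    '
}

# Intended use on the DevStack host (not run here):
#   systemctl list-units 'devstack@*' --all --plain --no-legend | failed_devstack_units
```

Note that "active running" only says the service processes are up. It says nothing about the network data plane, which is where the problem described here sits.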

I also restarted all the services, but nothing changed:

sudo systemctl restart devstack@*

I checked the status of Open vSwitch:

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# ovs-vsctl show
    95bd0929-30f8-42d8-9a66-699036952e8c
        Manager "ptcp:6640:127.0.0.1"
            is_connected: true
        Bridge br-ex
            Port br-ex
                Interface br-ex
                    type: internal
            Port patch-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3-to-br-int
                Interface patch-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3-to-br-int
                    type: patch
                    options: {peer=patch-br-int-to-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3}
        Bridge br-int
            fail_mode: secure
            datapath_type: system
            Port br-int
                Interface br-int
                    type: internal
            Port tapf147ff8d-9b
                Interface tapf147ff8d-9b
            Port tapc6517e8f-b0
                Interface tapc6517e8f-b0
            Port tap7e86de1c-70
                Interface tap7e86de1c-70
            Port patch-br-int-to-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3
                Interface patch-br-int-to-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3
                    type: patch
                    options: {peer=patch-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3-to-br-int}
        ovs_version: "2.13.3"

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# ovs-vsctl list-ports br-ex
    patch-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3-to-br-int

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# ovs-vsctl list-ports br-int
    patch-br-int-to-provnet-b606a04f-2955-4f7d-807b-3677bb3cb4e3
    tap7e86de1c-70
    tapc6517e8f-b0
    tapf147ff8d-9b

I found an error, but I don’t know whether it is important:

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# ovs-ofctl dump-ports br-ex
    2021-11-29T18:15:59Z|00001|vconn|WARN|unix:/var/run/openvswitch/br-ex.mgmt: version negotiation failed (we support version 0x01, peer supports versions 0x04, 0x06)
    ovs-ofctl: br-ex: failed to connect to socket (Broken pipe)
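This warning describes a protocol-version mismatch between the `ovs-ofctl` client and the bridge, not necessarily a broken bridge. By default `ovs-ofctl` speaks OpenFlow 1.0 (wire version 0x01), while the bridge here only accepts 0x04 and 0x06, that is OpenFlow 1.3 and 1.5. Passing `-O` with a matching protocol should let the command connect. The helper name `ofp_protocol` below is only for illustration.

```bash
# ofp_protocol: map an OpenFlow wire version, as printed in the warning,
# to the protocol name accepted by `ovs-ofctl -O`.
ofp_protocol() {
    case "$1" in
        0x01) echo OpenFlow10 ;;
        0x02) echo OpenFlow11 ;;
        0x03) echo OpenFlow12 ;;
        0x04) echo OpenFlow13 ;;
        0x05) echo OpenFlow14 ;;
        0x06) echo OpenFlow15 ;;
        *)    echo "unknown wire version: $1" >&2; return 1 ;;
    esac
}

# The peer above offers 0x04 and 0x06, so for example (not run here):
#   ovs-ofctl -O "$(ofp_protocol 0x04)" dump-ports br-ex
```

If the command then succeeds, the warning can be ruled out as a cause of the lost connectivity.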

    ((kypo-crp-openstack-base) ) root@ip-172-31-6-66:~/kypo-crp-openstack-base# ovs-dpctl show -s
    system@ovs-system:
      lookups: hit:8565 missed:364 lost:0
      flows: 4
      masks: hit:14131 total:2 hit/pkt:1.58
      port 0: ovs-system (internal)
        RX packets:0 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:0 TX bytes:0
      port 1: br-ex (internal)
        RX packets:0 errors:0 dropped:4285 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:0 TX bytes:0
      port 2: br-int (internal)
        RX packets:0 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:0 TX bytes:0
      port 3: tap7e86de1c-70
        RX packets:407 errors:0 dropped:0 overruns:0 frame:0
        TX packets:38 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:30048 (29.3 KiB) TX bytes:2668 (2.6 KiB)
      port 4: tapc6517e8f-b0
        RX packets:4 errors:0 dropped:0 overruns:0 frame:0
        TX packets:16 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:360 TX bytes:1216 (1.2 KiB)
      port 5: tapf147ff8d-9b
        RX packets:1592 errors:0 dropped:0 overruns:0 frame:0
        TX packets:119 errors:0 dropped:0 aborted:0 carrier:0
        collisions:0
        RX bytes:115182 (112.5 KiB) TX bytes:6126 (6.0 KiB)
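One counter stands out in these statistics: br-ex shows 4285 dropped RX packets and no packets received, while the tap ports are clean. A minimal sketch for pulling such ports out of the `ovs-dpctl show -s` output follows; the function name `rx_drops` is hypothetical.

```bash
# rx_drops: read `ovs-dpctl show -s` output on stdin and print every port
# whose RX "dropped" counter is non-zero, as "<port name> <dropped count>".
rx_drops() {
    awk '
        $1 == "port" { name = $3 }               # e.g. "port 1: br-ex (internal)"
        $1 == "RX" && $2 ~ /^packets:/ {         # the RX packet line, not "RX bytes"
            for (i = 3; i <= NF; i++)
                if ($i ~ /^dropped:/) {
                    n = substr($i, 9)            # text after "dropped:"
                    if (n + 0 > 0) print name, n
                }
        }
    '
}

# Intended use on the OpenStack host (not run here):
#   ovs-dpctl show -s | rx_drops
# Possible follow-up checks on the flagged bridge (also not run here):
#   ip addr show br-ex
#   ip link show br-ex
```

One possible reading, not verified here, is that br-ex came back from the reboot without its IP address or in the down state. DevStack normally assigns the public network gateway address to br-ex while `stack.sh` runs, and that setting is not stored anywhere persistent, which would fit the "not persistent across reboots" behaviour described above.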

For the moment, I have stopped the procedure at this point. I will come back to it soon. If you have any idea that could help me restore connectivity between the OpenStack host and the instance VMs, you are welcome!

Resources

Website: https://www.kypo.cz/

Documentation: https://docs.crp.kypo.muni.cz/

Gitlab: https://gitlab.ics.muni.cz/muni-kypo-crp

Twitter: https://twitter.com/KYPOCRP

Hello François.

Very nice quick start guide. Here are a few notes:

1. The kypo-head instance size is too small for a working KYPO (2 CPUs and 8 GB RAM is the minimum for deployment, 12 GB RAM for building sandboxes; 16 GB RAM is recommended).

2. stack$ unset "${!OS_@}" should come right before stack$ source app-cred-kypo-openrc.sh, otherwise the CLI won’t work (it unsets the classic Keystone credentials).

3. You can skip this: stack$ openstack security group rule list default

4. "I think the problem can be in relation with the Ubuntu Firewall and with Security Groups." What seems to be the problem? I didn’t notice any failed task in your guide.
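Note 2 can be illustrated with a short bash sketch. The variable values are placeholders, and the `source` line is left as a comment because the credential file only exists on the guide's host.

```bash
# Simulate leftover Keystone credentials from an earlier `source openrc`
# (placeholder values, for illustration only).
export OS_USERNAME=admin OS_PASSWORD=secret OS_PROJECT_NAME=demo

# In bash, "${!OS_@}" expands to the NAMES of all variables starting with
# OS_, so a single call removes every one of them.
unset "${!OS_@}"

# Only now load the application credential (not run here):
#   source app-cred-kypo-openrc.sh

# Count the OS_ variables still present in the environment.
env | grep -c '^OS_' || true
```

Doing the `unset` first matters because the old password-based variables would otherwise remain set next to the application credential, and the client may then try to authenticate with a conflicting mix of both.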

Hi Team KYPO CRP,

Thanks for your comment and your help.

I modified the end of the article to show the problem more clearly.

1. OK to modify the size of the instances.

2. OK to move unset "${!OS_@}".

3. OK to skip "security group rule list".

4. The problem is that after rebooting the OpenStack server, I lost connectivity between the OpenStack host and the instance VMs: ping and SSH both fail. After some research, I found that a DevStack environment is not persistent across server reboots. I restarted all the OpenStack services, but connectivity is still down. I think the internal Open vSwitch is running correctly, but I’m not really sure.

Hi Francois,

Thanks for the guide. It really helped me get started with the kypo deployment.

Currently, I am stuck while running ./create-base.sh

Getting the below error!

ResourceInError: resources.kypo-proxy-jump: Went to status ERROR due to "Message: No valid host was found. , Code: 500"

Can you please suggest what the issue could be? I am new to OpenStack.

Hi Sri,

I had the same problem. The error means that OpenStack couldn’t find any hypervisor that passed the Nova scheduler filters. You can find the cause in the log file of the Nova component.

Example: if your virtual machine has 4 CPUs and 8 GB RAM, the instances can use no more than that. So when you create the flavors for the kypo-head and kypo-proxy instances, use for example:

openstack flavor create --ram 2048 --disk 20 --vcpus 1 csirtmu.tiny1x2

openstack flavor create --ram 4096 --disk 40 --vcpus 2 csirtmu.small2x4

That is 3 CPUs and 6 GB RAM in total. Then change the file openstack-defaults.sh according to the instructions (use csirtmu.small2x4 for kypo-head and csirtmu.tiny1x2 for kypo-proxy). If the instances need more than 4 CPUs or 8 GB in total, you will get the error. Adjust the parameters to your machine.
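The arithmetic in this comment can be checked before creating anything. The sketch below is a conservative rule of thumb, not Nova's actual scheduling logic (Nova also applies overcommit ratios and reserves memory for the host); the function name `fits_host` and its argument format are made up for this example.

```bash
# fits_host: check that the summed vCPUs and RAM (MB) of the planned
# instances stay within what the OpenStack host offers.
# usage: fits_host HOST_VCPUS HOST_RAM_MB VCPUS:RAM_MB [VCPUS:RAM_MB ...]
fits_host() {
    host_cpu=$1; host_ram=$2; shift 2
    cpu=0; ram=0
    for f in "$@"; do
        cpu=$((cpu + ${f%%:*}))   # part before the colon: vCPUs
        ram=$((ram + ${f##*:}))   # part after the colon: RAM in MB
    done
    echo "requested: ${cpu} vCPU, ${ram} MB RAM (host: ${host_cpu} vCPU, ${host_ram} MB)"
    [ "$cpu" -le "$host_cpu" ] && [ "$ram" -le "$host_ram" ]
}

# The two flavors above (1 vCPU / 2048 MB and 2 vCPU / 4096 MB)
# on a 4 vCPU / 8 GB host:
fits_host 4 8192 1:2048 2:4096
# prints: requested: 3 vCPU, 6144 MB RAM (host: 4 vCPU, 8192 MB)
```

The function returns a non-zero status when the request exceeds the host, so it can guard a deployment script.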

Hi Tony,

Thanks, it worked, but now I am getting another error.

Exhausted all hosts available for retrying build failures for instance 0db11ab6-ca3e-476d-b423-b26d00e884e1., Code: 500"

The machine has enough resources. Can you please suggest what could be done?

I got stuck there too. In a VM environment, "Virtualize Intel VT-x/EPT" must be enabled in the hypervisor (VirtualBox: Machine Settings -> System -> Processor -> enable "Nested VT-x/AMD-V").
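Whether the setting took effect can be checked from inside the guest, since the CPU flags then include `vmx` (Intel VT-x) or `svm` (AMD-V). A minimal sketch, with a hypothetical function name `has_hw_virt`:

```bash
# has_hw_virt: succeed if the given cpuinfo file (default: /proc/cpuinfo)
# lists the vmx (Intel VT-x) or svm (AMD-V) CPU flag as a whole word.
has_hw_virt() {
    grep -Eq '(^|[[:space:]])(vmx|svm)([[:space:]]|$)' "${1:-/proc/cpuinfo}"
}

# Intended use inside the VM that hosts OpenStack (not run here):
#   if has_hw_virt; then
#       echo "hardware virtualization is exposed to this VM"
#   else
#       echo "no vmx/svm flag: KVM is not available to Nova on this VM"
#   fi
```

The optional file argument exists only so the function can be tried against a saved copy of `/proc/cpuinfo`.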

You can also check the comments on the English version of this post: https://www.spacesecurity.info/en/install-kypo-cyber-range-platform-on-openstack/#comment-1431